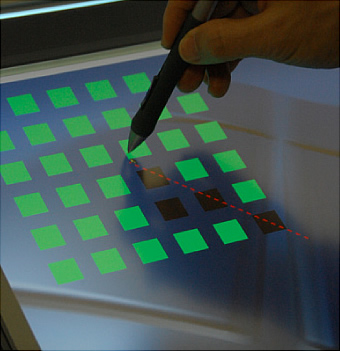

Pen-based Interaction

Multi-touch Interaction

Current multi-touch interaction techniques typically use only the x-y coordinates of the finger’s contact with the screen. However, when a finger contacts a touch-sensitive surface, it usually approaches at an angle and covers a relatively large 2D area rather than a single precise point. In our work, a multi-touch device based on Frustrated Total Internal Reflection (FTIR) is used to collect finger imprint data. We designed a series of experiments to explore the properties of human finger input, and identified and leveraged several useful ones, such as contact area, contact shape and contact orientation, that allow users to communicate information to a computer more efficiently and smoothly. New-generation user interfaces can exploit these natural properties to address challenges that lie beyond the realm of today’s mouse-and-keyboard paradigm.
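
As a rough illustration of how contact area, shape and orientation could be derived from a finger imprint, the sketch below computes them from a binary contact mask using second-order image moments. This is a common image-processing approach and not necessarily the method used in our experiments; the function name `contact_properties`, the mask format and the `elongation` measure are assumptions made for the example.

```python
import math


def contact_properties(mask):
    """Estimate contact properties from a binary imprint mask (hypothetical helper).

    mask is a list of rows; truthy cells mark pixels where the finger touches.
    The orientation is measured in image coordinates (y grows downward) and is
    only defined modulo 180 degrees, because moments cannot tell the two ends
    of the major axis apart.
    """
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    area = len(pixels)
    if area == 0:
        raise ValueError("empty contact mask")

    # Centroid: the single x-y point that conventional techniques would report.
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area

    # Central second-order moments, normalised by the area.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels) / area
    mu02 = sum((y - cy) ** 2 for _, y in pixels) / area
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels) / area

    # Angle of the major axis of the equivalent ellipse.
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)

    # Eigenvalues of the moment matrix give the spread along each axis.
    spread = math.sqrt((mu20 - mu02) ** 2 + 4 * mu11 ** 2)
    major = (mu20 + mu02 + spread) / 2
    minor = (mu20 + mu02 - spread) / 2
    elongation = math.sqrt(major / minor) if minor > 0 else float("inf")

    return {
        "area": area,
        "centroid": (cx, cy),
        "orientation_deg": math.degrees(theta),
        "elongation": elongation,
    }


# A small, made-up imprint: a horizontal blob 6 pixels wide and 2 pixels tall.
example_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 1, 1, 1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
]
example_properties = contact_properties(example_mask)
```

In practice the mask would come from thresholding the FTIR camera image, and each finger blob would be segmented before its properties are measured.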

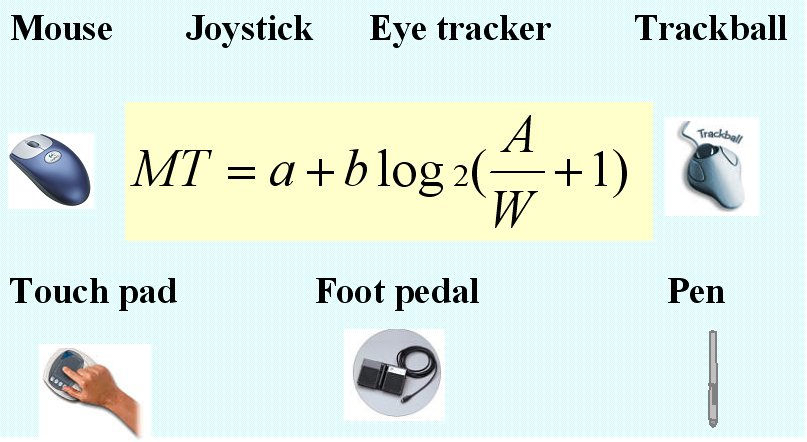

Human performance modeling

The development of human performance models is important to both theory and practice in human-computer interaction. However, current studies focus on the effect of system factors (e.g., target width and the amplitude between targets) on movement time. This project seeks to establish models that accurately incorporate human physiological and psychological information (e.g., subjective factors) into mathematical functions. Such models will improve prediction accuracy and will be applicable to the evaluation of input devices. We have systematically studied both linear and nonlinear models through basic computer tasks such as pointing and steering. A number of new human performance models were established. Compared with traditional models, these models include not only the system layer (i.e., target width and distance to the target) but also the subjective layer.
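
For context, the best-known traditional model of pointing that uses only system factors is Fitts' law, which in its Shannon formulation predicts movement time as MT = a + b · log2(A/W + 1) for target amplitude A and width W. The sketch below fits the constants a and b by ordinary least squares. It illustrates only this baseline, not the new models described above; the function names and the sample conditions are assumptions, and the movement times are generated from chosen constants rather than measured.

```python
import math


def index_of_difficulty(amplitude, width):
    """Shannon formulation of the Fitts index of difficulty, in bits."""
    return math.log2(amplitude / width + 1)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict_movement_time(a, b, amplitude, width):
    """Predicted movement time for one pointing condition."""
    return a + b * index_of_difficulty(amplitude, width)


# Illustrative (amplitude, width) conditions in pixels; not experimental data.
conditions = [(64, 32), (128, 32), (256, 16), (512, 16)]

# Synthetic movement times in seconds, generated from assumed constants
# a = 0.2 and b = 0.15 so that the fit can be checked against a known answer.
TRUE_A, TRUE_B = 0.2, 0.15
times = [predict_movement_time(TRUE_A, TRUE_B, amp, w) for amp, w in conditions]

ids = [index_of_difficulty(amp, w) for amp, w in conditions]
fitted_a, fitted_b = fit_line(ids, times)
```

With real data the fitted constants characterise a device or technique, and the residuals show how much of the variation in movement time the system factors leave unexplained.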

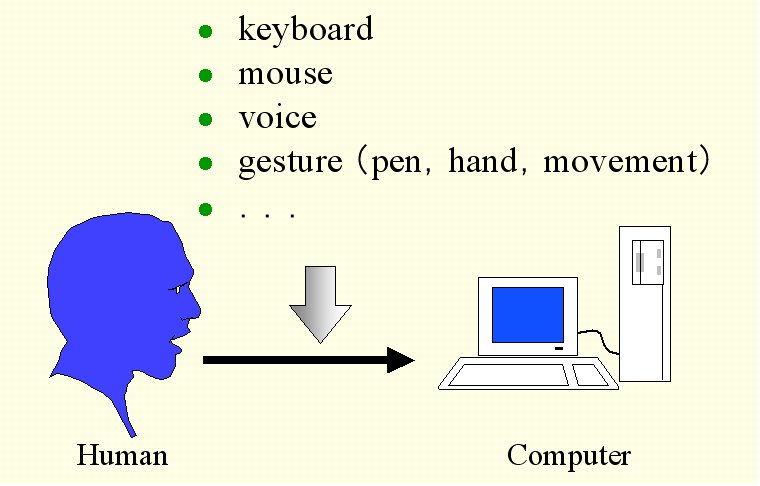

Eye-based Interaction

Eye tracking is a promising way to alleviate the asymmetrical bandwidth problem in human-computer interaction (HCI), but it remains problematic because of issues such as the “Midas touch” problem, limited tracking accuracy, calibration errors and drift, and especially the instability of the eye cursor, which results mainly from the inherent jittery motion of the eyes. To improve the stability of the eye cursor, we proposed a set of new methods that modulate eye cursor trajectories by counteracting eye jitter. Our experimental results indicate that these methods are especially effective in improving the stability of the eye cursor in eye-based interaction. Furthermore, we proposed a unique quantitative model for dwell-based eye pointing tasks to the HCI community.
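
To make the idea of counteracting eye jitter concrete, the sketch below smooths a stream of gaze samples with an exponential moving average. This is a generic filter chosen for illustration and is not one of the methods proposed in this project; the function name `smooth_gaze`, the `alpha` parameter and the sample coordinates are all assumptions.

```python
def smooth_gaze(samples, alpha=0.3):
    """Smooth raw gaze samples with an exponential moving average.

    samples is an iterable of (x, y) screen coordinates. alpha in (0, 1]
    controls the trade-off: smaller values suppress more jitter but make the
    cursor lag further behind real eye movements; alpha = 1 disables smoothing.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")

    smoothed = []
    sx = sy = None
    for x, y in samples:
        if sx is None:
            # The first sample initialises the filter state.
            sx, sy = x, y
        else:
            sx = alpha * x + (1 - alpha) * sx
            sy = alpha * y + (1 - alpha) * sy
        smoothed.append((sx, sy))
    return smoothed


# Made-up samples jittering around a fixation point at (100, 100).
raw_samples = [(100, 100), (104, 96), (96, 104), (104, 96), (96, 104)]
cursor_path = smooth_gaze(raw_samples)
```

A fixed smoothing factor also slows the cursor during deliberate saccades, which is one reason a practical technique needs to treat jitter and intentional movement differently.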

Haptic interaction

We performed a number of fundamental empirical studies on applying haptic input and output modalities. They contribute to our basic understanding of the relationship between different haptic modalities and human performance in fundamental human-computer interaction tasks. They also provide insights and implications for future designs that incorporate haptic modalities into natural interactions.

Evaluation on multimodal interfaces

This study sought to identify the best combinations of modalities through usability testing. We asked how users choose among interaction modes when they work on a particular application. We conducted two experiments to compare interaction modes on a CAD system and a map system. The experiments provided information on how users adapt to each interaction mode and how easily they are able to use these modes.

Interactive techniques and text input in immersive virtual environments

Direct manipulation by hand is an intuitive and simple way of positioning objects in an immersive virtual environment. However, this technique is not suitable for making precise adjustments to virtual objects in an immersive environment because it is difficult to hold a hand unsupported in midair and to then release an object at a fixed point. We proposed an alternative technique using a virtual 3D gearbox widget, which enables users to adjust values precisely.

Cooperative work in a distributed immersive virtual environment, which connects virtual reality systems with immersive projection displays such as CAVE, usually requires users to put annotations in the virtual world. Simple 3D symbols or 3D icons are useful in a fixed task; however, simple symbolic annotations are insufficient for more general cooperative work because they cannot represent complex abstract annotations. Therefore, we need textual annotations in an immersive projection display system. We have conducted a pilot experiment to evaluate text input methods using handheld devices for immersive virtual environments.

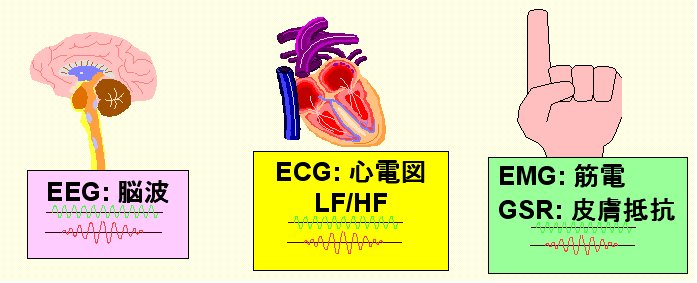

Physiological indices for testing user fatigue

We developed a novel usability method called "Task Break Monitoring" (TBM) for monitoring users' physiological indices while they use the computer. In this method, users take a break with their eyes closed after each interaction with the computer. During each break, the electroencephalogram (EEG), especially alpha 1 waves, the electrocardiogram (ECG) and galvanic skin resistance (GSR) are monitored and recorded. We believe TBM to be an important innovation in human-computer interaction research and development because the aftereffects of computer use have an obvious bearing on recovery time, user endurance and psychological attitude to the technology in general.